The `VirtualPythonPlugin` sample for Apache Airflow v1 sets `name = 'virtual_python_plugin'`.

The smart sensor is controlled by the following settings:

- `use_smart_sensor`: indicates whether the smart sensor is enabled.
- `shards`: the number of concurrently running smart sensor jobs for the Airflow cluster.
- `sensors_enabled`: a list of sensor class names that will use the smart sensor. Users keep using the same class names.

The following example shows a plugins.zip file with a flat directory structure for Apache Airflow v1.
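A minimal sketch of how a flat plugins.zip could be assembled with Python's standard library. The file names and placeholder contents below are hypothetical and chosen only for illustration; the point is that every entry sits at the top level of the archive, with no subdirectories.

```python
import io
import zipfile

# Hypothetical plugin files; names and contents are placeholders, not real plugins.
PLUGIN_FILES = {
    "virtual_python_plugin.py": "# plugin module placeholder\n",
    "my_custom_operator.py": "# operator module placeholder\n",
}


def build_flat_plugins_zip(files: dict) -> bytes:
    """Return the bytes of a zip archive with every file at the top level."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as archive:
        for name, source in files.items():
            # A flat layout has no directory components in any entry name.
            if "/" in name:
                raise ValueError(f"flat layout expects no directories: {name}")
            archive.writestr(name, source)
    return buffer.getvalue()


def list_members(zip_bytes: bytes) -> list:
    """Return the sorted entry names stored in the archive."""
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        return sorted(archive.namelist())


if __name__ == "__main__":
    data = build_flat_plugins_zip(PLUGIN_FILES)
    print(list_members(data))
```

In a real project the archive would be written to disk and uploaded; building it in memory here just keeps the sketch self-contained.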

#Airflow 2.0 plugins software

The sample plugin opens with a copyright line ("Copyright, Inc.") and the following license notice:

Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

The plugin code then runs `from airflow.plugins_manager import AirflowPlugin`, defines `_generate_virtualenv_cmd(tmp_dir: str, python_bin: str, system_site_packages: bool) -> List` to build a command list `cmd`, assigns that function over `airflow.utils.python_virtualenv._generate_virtualenv_cmd`, and declares `class VirtualPythonPlugin(AirflowPlugin)`.

Repositories: boxplugin (3, Apache-2.0, 4), salesforceplugin (32, Apache-2.0, 24), googleanalyticsplugin (41, Apache-2.0, 37), marketoplugin (0, Apache-2.0, 5).

To drive a DAG from user input, use the Airflow Variable model. Step 1: define your business model with user inputs. Step 2: write it as a DAG file in Python; the user input can be read through the Airflow Variable model.

#Airflow 2.0 plugins free

Apache Airflow's built-in plugin manager can integrate external features to its core by simply dropping files in an `$AIRFLOW_HOME/plugins` folder. It allows you to use custom Apache Airflow operators, hooks, sensors, or interfaces. The following section provides an example of flat and nested directory structures in a local development environment and the resulting import statements, which determine the directory structure within a plugins.zip. The Apache Airflow Scheduler and the Workers look for custom plugins during startup on the AWS-managed Fargate container for your environment at `/usr/local/airflow/plugins/*`.

New: Operators, Hooks, and Executors. The import statements in your DAGs, and the custom plugins you specify in a plugins.zip on Amazon MWAA, have changed between Apache Airflow v1 and Apache Airflow v2. For example, `from airflow.contrib.hooks.aws_hook import AwsHook` in Apache Airflow v1 has changed to `from airflow.providers.amazon.aws.hooks.base_aws import AwsBaseHook` in Apache Airflow v2. To learn more, see Python API Reference in the Apache Airflow reference guide.

Importing operators, sensors, and hooks added in plugins using `airflow.{operators,sensors,hooks}.<plugin_name>` is no longer supported. These extensions should be imported as regular Python modules. In v2 and above, the recommended approach is to place them in the DAGs directory and create and use an `.airflowignore` file to exclude them from being parsed as DAGs. To learn more, see Modules Management and Creating a custom Operator in the Apache Airflow reference guide.

Two parameters of `TriggerDagRunOperator` control waiting:

- `wait_for_completion` (bool): whether or not to wait for DAG run completion.
- `poke_interval` (int): poke interval to check DAG run status when `wait_for_completion=True`.

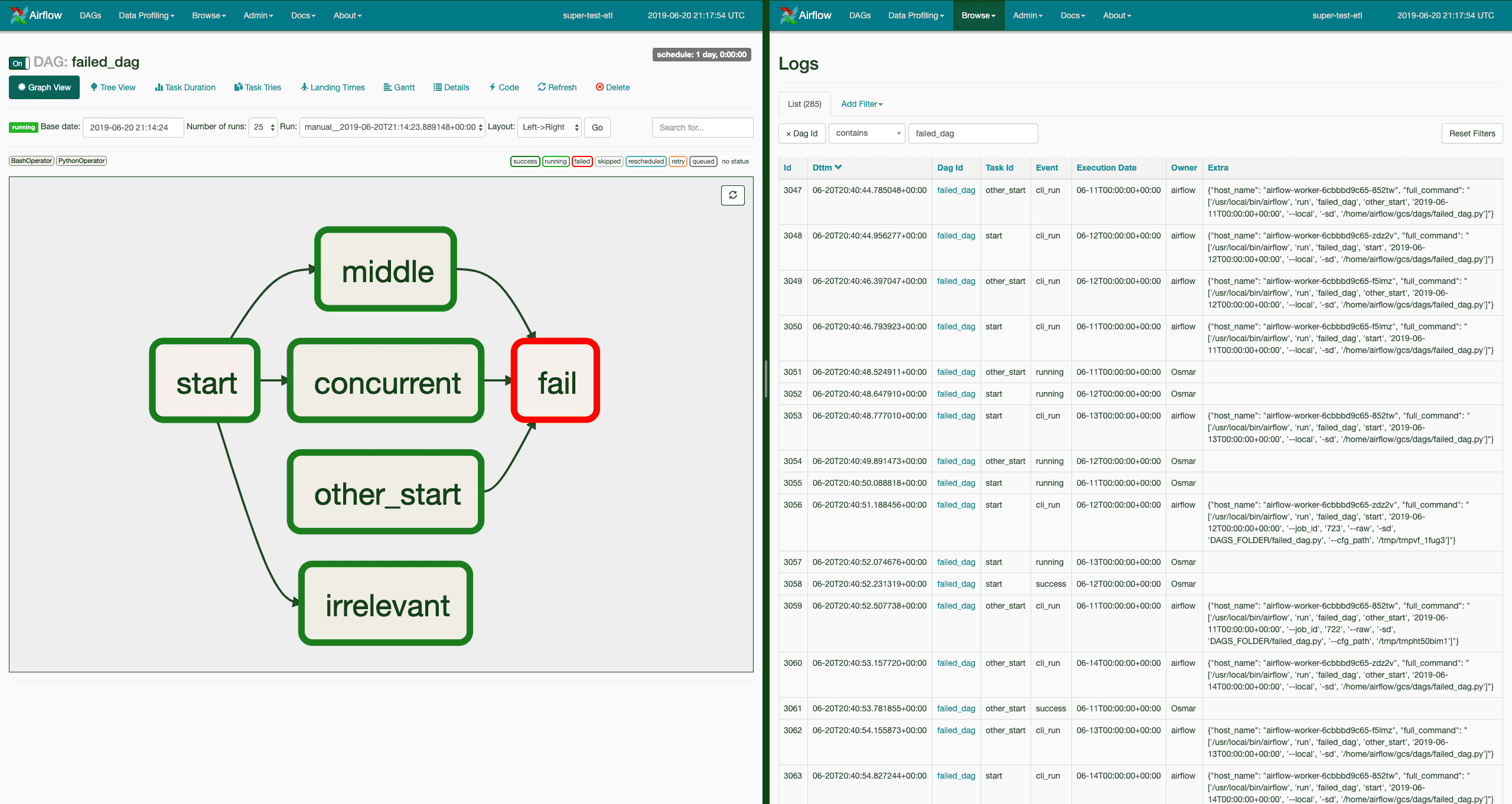

Using Airflow plugins can be a way for companies to customize their Airflow installation to reflect their ecosystem. Plugins can be used as an easy way to write, share and activate new sets of features. There's also a need for a set of more complex applications to interact with different flavors of data and metadata.

OpenSearch Dashboards is the default visualization tool for data in OpenSearch. It also serves as a user interface for many of the OpenSearch plugins.

An operator link named `Triggered DAG` allows users to access the DAG triggered by a task using `TriggerDagRunOperator`. It exposes `get_link(self, operator, dttm)`.

`TriggerDagRunOperator(*, trigger_dag_id: str, conf: Optional[Dict] = None, execution_date: Optional[Union[str, datetime.datetime]] = None, reset_dag_run: bool = False, wait_for_completion: bool = False, poke_interval: int = 60, allowed_states: Optional = None, failed_states: Optional = None, **kwargs)`

Triggers a DAG run for a specified `dag_id`.

Parameters:

- `trigger_dag_id` (str): the `dag_id` to trigger (templated).
- `conf` (dict): configuration for the DAG run.
- `execution_date` (str or datetime.datetime): execution date for the DAG (templated).
- `reset_dag_run` (bool): whether or not to clear the existing DAG run if it already exists. This is useful when backfilling or rerunning an existing DAG run. When `reset_dag_run=False` and the DAG run exists, `DagRunAlreadyExists` is raised. When `reset_dag_run=True` and the DAG run exists, the existing DAG run is cleared to rerun.
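The `reset_dag_run` rules for `TriggerDagRunOperator` can be illustrated with a small toy model. This is only a sketch of the documented decision logic, not Airflow's implementation: the `trigger` function, the `existing_runs` set, and the returned strings are hypothetical, and the local `DagRunAlreadyExists` class is a stand-in for Airflow's exception of the same name.

```python
class DagRunAlreadyExists(Exception):
    """Local stand-in for Airflow's exception of the same name."""


def trigger(existing_runs: set, run_key: tuple, reset_dag_run: bool = False) -> str:
    """Toy model of what happens when a DAG run is triggered.

    existing_runs holds (dag_id, execution_date) pairs that already exist.
    """
    if run_key not in existing_runs:
        # No run for this dag_id and execution date yet: create one.
        existing_runs.add(run_key)
        return "created"
    if reset_dag_run:
        # The run exists and a reset was requested: clear it so it reruns.
        return "cleared"
    # The run exists and no reset was requested: refuse to trigger again.
    raise DagRunAlreadyExists(f"run already exists: {run_key}")


if __name__ == "__main__":
    runs = set()
    key = ("example_dag", "2021-01-01")
    print(trigger(runs, key))
    print(trigger(runs, key, reset_dag_run=True))
```

Calling `trigger` a third time with the default `reset_dag_run=False` raises the stand-in exception, mirroring the documented behavior for an existing run.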
